AI leadership is about merging human expertise with AI to create smarter, faster, and more ethical organizations. Companies that succeed with AI don’t just adopt technology - they rethink decision-making, train teams to integrate AI into workflows, and prioritize ethics. For example, Moderna used AI to design a COVID-19 vaccine candidate in just two days, while Sanofi improved therapy target identification by 20–30%.

Key takeaways for building effective AI leadership:

- Data-Driven Decisions: Use AI insights to complement human judgment.

- AI-First Mindset: Leaders must actively engage with AI to spot opportunities and challenges.

- Ethics and Trust: Establish clear frameworks to ensure responsible AI use.

- Training and Collaboration: Equip teams with AI skills and foster human-AI synergy.

- Measurable Goals: Track AI’s impact on business outcomes like ROI and efficiency.

The stakes are high - organizations with strong AI leadership can see up to 3.6× greater impact on their bottom line. Are you ready to lead with AI?



What Is AI Leadership?

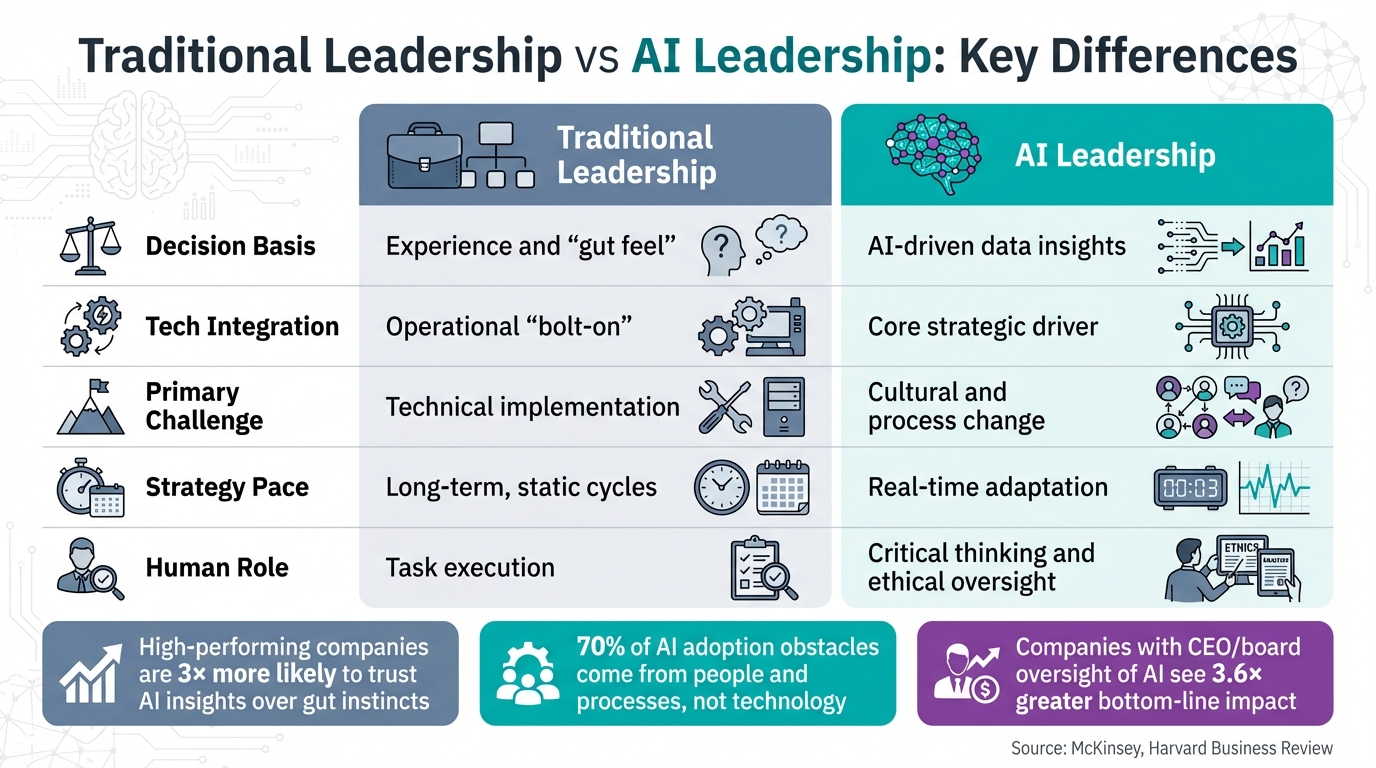

Traditional Leadership vs AI Leadership: Key Differences

AI leadership represents a shift in how organisations integrate technology and human potential. Instead of treating AI as a simple tool or add-on, it focuses on creating an environment where human expertise and AI capabilities complement each other seamlessly [5]. Unlike traditional leadership, which often relies on intuition and past experience, AI leadership is grounded in data-driven decision-making. For example, high-performing companies are three times more likely to trust AI insights over gut instincts [5]. This approach also requires agility, as operations increasingly rely on autonomous systems that demand real-time adjustments.

Interestingly, the biggest challenges in adopting AI aren't technical. Around 70% of obstacles come from issues related to people and processes, not technology. Furthermore, 91% of data leaders in large companies cite cultural resistance and change management as the main barriers to becoming data-driven, with only 9% pointing to technology [4][5]. IKEA offers a case study in tackling these challenges: between 2024 and 2025, they formed a multidisciplinary AI governance team, including technologists, legal experts, policy advisors, and designers. This team ensured that AI initiatives aligned with business goals while maintaining ethical standards [5]. Another telling statistic? Companies where the CEO or board directly oversees AI initiatives experience a 3.6× greater impact on their bottom line [5]. McKinsey aptly sums up the situation:

"The biggest barrier to scaling AI is not employees - who are ready - but leaders, who are not steering fast enough" [5].

These insights highlight the principles that set AI leadership apart from traditional management.

Core Principles of AI Leadership

Three key principles define effective AI leadership, making it distinct from conventional leadership styles.

1. Combine data insights with human judgement.

This principle doesn't mean replacing human intuition but enhancing it. Leaders should shift their mindset from "What does my experience tell me?" to "What do the data reveal that I might have overlooked?"

2. Promote a culture of innovation and experimentation.

Leaders need to actively use and advocate for AI tools, showing their commitment to transformation. Creating a safe space for teams to experiment - and to let go of outdated practices - is crucial. Transparency plays a big role here. As Harvard Business Review notes:

"Employees won't trust AI if they don't trust their leaders" [5].

3. Build strong ethical frameworks from the start.

Ethics can't just be a buzzword. Organisations need clear, actionable Responsible AI programmes with robust governance. IKEA’s multidisciplinary AI governance team demonstrates how diverse expertise can balance innovation with ethical responsibility [5].

| Feature | Traditional Leadership | AI Leadership |
|---|---|---|
| Decision Basis | Experience and "gut feel" | AI-driven data insights [5] |
| Tech Integration | Operational "bolt-on" | Core strategic driver |
| Primary Challenge | Technical implementation | Cultural and process change [5] |
| Strategy Pace | Long-term, static cycles | Real-time adaptation |
| Human Role | Task execution | Critical thinking and ethical oversight [4] |

While these principles are essential, the human element remains at the heart of AI leadership.

The Human Element in AI Leadership

At its core, AI leadership is about people. As Alex Milovanovich puts it:

"Success lies not in asking what AI can do instead of humans, but in discovering what humans and AI can achieve together that neither could accomplish alone" [4].

A cautionary tale underscores this point. In November 2021, Zillow shut down its "Zillow Offers" home-buying division after its AI-driven property valuation algorithms failed to account for market complexities. This misstep, which ignored the need for human oversight, led to a $300 million loss and a 20% drop in stock value [4].

While AI excels at processing massive datasets, it lacks the creativity, adaptability, and nuanced understanding that humans bring to decision-making [7]. Effective AI leadership focuses on using AI to support, not replace, human contributions. Skills like ethical reasoning, emotional intelligence, and contextual judgement remain uniquely human strengths that AI cannot replicate.

To ensure successful collaboration between humans and AI, leaders must prioritise reskilling their teams. Critical thinking and emotional intelligence are essential traits for navigating this new landscape. Additionally, organisations should invest meaningfully in Responsible AI programmes, going beyond surface-level ethics checklists. By doing so, they can minimise technical errors and achieve stronger business outcomes [6].

Key Traits of Successful AI Leaders

What sets successful AI leaders apart? It's not just about mastering algorithms. The real difference lies in blending technical expertise with the ability to rethink workflows, modernize outdated processes, and make ethical decisions. These leaders balance human intuition with data-driven insights to guide their organizations effectively.

AI Literacy and Technical Knowledge

To lead effectively in the AI space, having a solid grasp of the technology is essential. But AI literacy goes beyond theoretical knowledge - it’s about hands-on experience. Leaders who excel don’t just talk about AI; they actively use it in their daily lives, both at work and at home. Jean-Philippe Courtois, a former Microsoft executive, emphasizes this point:

"Use AI every day... in your personal and in your professional life" [9].

This regular interaction with AI sharpens a leader’s ability to spot inefficiencies and identify weak points, like hallucinations or poor data quality [9]. By understanding these limitations firsthand, leaders can make better decisions about where AI fits and where it doesn’t [9][10].

Flexibility and Continuous Learning

Alongside technical expertise, adaptability is crucial. The pace of AI advancements is relentless, and leaders must keep up. Consider this: in 2025, 42% of companies abandoned most of their AI projects, compared to just 17% in 2024 [5]. While 78% of leaders think they’ve mastered AI, only 39% of employees share that confidence [8]. This disconnect highlights the need for leaders to continually evolve.

Successful AI leaders don’t just tack AI onto existing systems - they reimagine processes entirely. For example, Jean-Philippe Courtois replaced a rigid "inspection culture" at Microsoft with a coaching model supported by digital dashboards. This shift allowed managers to focus on understanding customer needs rather than just forecasting [9]. Similarly, SAP’s CFO Dominik Asam revamped core operations to integrate generative AI, automating routine tasks and enabling teams to focus on more strategic work [9].

Patience also plays a role. Seventy-six percent of successful AI leaders give projects at least a year to address ROI or adoption challenges before considering budget cuts [5]. They foster environments where teams feel safe to experiment and learn from mistakes. As Dr. Dorottya Sallai of LSE explains:

"AI adoption is a cultural transition, one that requires overcoming psychological and leadership barriers more than technical ones" [5].

Ethical Leadership and Responsibility

Ethics in AI isn’t just about compliance - it’s central to building trust and achieving long-term success. Around 25% of managers report AI failures, ranging from technical glitches to decisions that harm communities [6]. Companies with well-developed ethical frameworks are 2.5 times more likely to gain customer trust [5].

Ethical AI leadership involves creating a culture that prioritizes safety and learning over rigid control. Leaders must carefully decide when to automate tasks and when to rely on human judgment, preserving qualities like ethical reasoning and contextual understanding [9][4]. Notably, 52% of organizations now involve their boards in drafting AI ethics policies, signaling that accountability has moved beyond IT departments to the highest levels of governance [5].

These qualities are critical as organizations weave AI into their overall strategies, ensuring both innovation and responsibility go hand in hand.

How to Build AI Leadership in Organizations

Organizations aiming to lead in AI must go beyond hiring technical experts. They need a structured strategy that integrates AI leadership at every level. The disparity in confidence between leaders and employees is striking - while 78% of leaders feel confident in their AI mastery, only 39% of employees agree [8]. Bridging this gap requires clear frameworks and actionable plans.

Creating an AI-Driven Culture

To close the gap between leadership and employees, organizations must focus on reshaping their culture. This transformation starts with leadership but must extend throughout the organization. Take Moderna's CEO Stéphane Bancel as an example. In April 2024, he encouraged employees to use ChatGPT 20 times daily - not just to embrace AI but to make it a routine part of their work [12]. Leaders can set the tone by sharing their own AI experiences, including challenges and lessons learned.

The "5 Principles" framework offers a practical guide:

- Align: Define a clear vision and adoption goals.

- Activate: Provide structured training and designate "AI Champions."

- Amplify: Highlight successes and create knowledge-sharing hubs.

- Accelerate: Remove barriers to accessing tools and data.

- Govern: Establish safeguards to encourage responsible innovation [12].

IBM's marketing team demonstrated this approach in 2023 by rethinking their campaign process with AI. They reduced the time to create a marketing banner ad from six weeks to just 60 seconds, and the results were three times more effective than their previous methods [14].

Psychological safety is just as important as technical readiness. This echoes Dr. Dorottya Sallai's point, quoted earlier, that AI adoption is a cultural transition in which psychological and leadership barriers matter more than technical ones [5].

Organizations can foster this safety by creating controlled testing environments where failure is part of the learning process. For instance, DBS Bank in Singapore tackled data access issues by building a platform with role-based permissions for data scientists. They also introduced "Data Storyteller" roles to connect data insights with business decisions, leading to AI-powered credit scoring and fraud detection systems that improved efficiency [13].

Training Teams in AI Capabilities

AI training should be ongoing rather than a one-time event. The San Antonio Spurs achieved an impressive leap in AI fluency - from 14% to 85% - during the 2024–2025 season by embedding training into daily workflows rather than treating it as an isolated activity [12].

Developing "AI Shapers" is key. These individuals integrate AI into daily operations, driving adoption and tangible results [16]. Midlevel leaders play a crucial role by identifying opportunities at the frontline that might otherwise be missed [1]. Tailored, role-specific training often proves more effective than generic, organization-wide sessions [5]. For example, The Estée Lauder Companies launched a centralized "GPT Lab" in 2024–2025, gathering over 1,000 employee ideas. They turned the most promising concepts into prototypes and scaled them across the company [12].

Experimentation should be routine. Setting aside dedicated time - like one Friday a month - for teams to explore AI improvements or participate in no-code hackathons encourages continuous innovation. Tying AI engagement to performance reviews and career progression emphasizes its strategic importance [12]. Early adopters of AI are already seeing revenue grow 1.5 times faster than their peers [12].

These training efforts lay the groundwork for effective human-AI collaboration.

Measuring Human-AI Collaboration

To improve human-AI collaboration, organizations need to measure it effectively. This involves rethinking workflows, decision-making processes, and management practices to maximize the strengths of both humans and AI [3][11]. Simply layering AI onto existing systems often leads to failure, as evidenced by the 95% failure rate of AI pilots [14]. Instead, workflows should be redesigned with an AI-first mindset.

Accountability is crucial. Assign specific individuals or teams to oversee an AI system's behavior and impact, giving them the authority to resolve issues as they arise [15]. For example, JP Morgan took this step in April 2025 by appointing a Head of AI Policy who reports directly to the CEO. The Chief Information Security Officer also issued a public letter to third-party suppliers outlining AI standards. Additionally, the company established a Responsible AI governance function with over 20 specialized staff members [15].

Cross-functional governance boards, including representatives from legal, risk, ethics, and operations, can ensure thorough review of high-impact AI use cases. Mastercard, for example, created an AI Governance Council and collaborated with the Quebec Artificial Intelligence Institute (Mila) to advance research on bias testing and mitigation for real-world applications [15].

Regular audits for unintended bias are essential. If an AI decision can't be explained to non-experts, it won't be trusted by the people relying on it [15]. Publishing an AI roadmap that links every use case to a strategic goal and sharing it widely within the organization can build trust and alignment [5]. When CEOs or boards take direct oversight of AI initiatives, organizations see a 3.6-fold increase in bottom-line impact. By the end of 2025, 74% of advanced generative AI initiatives were meeting or exceeding ROI targets - a clear indicator of the value of structured measurement and accountability [5].
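
One simple way to start such a bias audit is to compare outcome rates across groups. The sketch below is illustrative only: the group labels, the sample data, and the 0.8 threshold (the "four-fifths" rule of thumb) are assumptions, not something the cited sources prescribe, and a real audit would use several metrics plus human review.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive outcomes per group.

    `decisions` is an iterable of (group, approved) pairs. The shape of
    this input is a hypothetical choice made for the example.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive outcome, so nothing to compare
    return min(rates.values()) / highest


# Hypothetical audit sample: ten AI-assisted decisions per group.
sample = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # assumed threshold; flags the system for human review
```

A flag like `needs_review` is a prompt for investigation by the accountable team, not a verdict on the system.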

Examples of AI Leadership in Practice

Let’s dive into some real-world examples that highlight how AI leadership is shaping industries today. The most impactful initiatives share a few key elements: involvement from top executives, rethinking workflows, and a laser focus on measurable results. These cases prove that AI leadership goes beyond technology - it's about reshaping how organisations operate.

Corporate Sector: Data-Driven Decision-Making

IBM's transformation between 2023 and 2026 is a prime example of AI-first leadership. The CEO set an ambitious goal to make IBM "the most productive company in the world", resulting in over €3.3 billion in value creation. Jean-Stephane Payraudeau, Managing Partner for Business Operations at IBM, emphasizes:

"Success with AI depends on leaders who can reimagine how their organisations work, redesigning processes, integrating data and empowering people to unlock AI's full potential" [14].

Sanofi, a pharmaceutical leader, embarked on a similar journey starting in 2019. Under CEO Paul Hudson, the company revamped its R&D and operations, demonstrating how leadership can drive innovation [2]. Research backs this up: companies with Chief Strategy Officers actively involved in tech decisions are up to 88 times more likely to see high returns across financial, customer, and operational metrics [17].

In the public sector, the Saudi Data & AI Authority (SDAIA) shows the transformative power of AI leadership. Since its establishment in 2019, SDAIA has launched initiatives like the "National Data Bank" and the "Estishraf" analytics platform, which supports government decision-making. By 2023, these tools were used by over 85 government entities, generating approximately €11.8 billion (50 billion SAR) in value [2]. Their success came from building a strong, interoperable digital foundation rather than focusing on isolated tech solutions. As one industry analysis puts it:

"Trying to scale AI across your organisation without a strong digital core is like driving a sports car with a decrepit engine - you may look good for the first mile, but you won't go very far" [2].

Among mid-sized firms with revenues around €900 million, 17% reported revenue or cost improvements exceeding 10% from AI initiatives [18]. Additionally, companies where the CFO has full authority over digital investments are more than twice as likely to outperform on profitability - 42% compared to 18% [17].

While corporate leaders focus on leveraging AI for strategic gains, the healthcare sector is using it to directly enhance patient care.

Healthcare: Improving Patient Outcomes with AI

AI leadership in healthcare comes with its own set of challenges, requiring a delicate balance between clinical expertise, operational efficiency, and patient safety. Studies show that clinician-led AI projects are far more likely to deliver measurable clinical benefits compared to those driven solely by technologists [20].

AI-powered "Command Centres" are a standout innovation, offering hospitals real-time insights into bed capacity. These systems help staff anticipate bottlenecks, prioritise patient transfers, and streamline discharges [19]. By revealing patterns and insights that might otherwise go unnoticed, these tools enhance clinical decision-making. Meanwhile, generative AI assistants are lightening administrative workloads by automating tasks like drafting patient documents and organising clinical notes [20].

In the pharmaceutical world, Roche has used AI to significantly cut patent research costs. By implementing a scalable AI framework, their legal teams can now work faster and with greater precision, showcasing how AI leadership can elevate specialised fields like intellectual property [21].

These examples illustrate the diverse ways AI leadership is driving change across industries, setting the stage for deeper discussions at the RAISE Summit.

AI Leadership at RAISE Summit

Leading organisations today understand that effective AI leadership goes beyond mastering technical skills. It’s about creating environments where leaders can exchange ideas, rethink strategies, and form partnerships that drive AI initiatives from experimental stages to full-scale implementation. The RAISE Summit is designed to meet these needs.

What RAISE Summit Offers

Held annually at the Carrousel du Louvre in Paris, the RAISE Summit brings together over 9,000 global leaders to focus on AI strategies and innovation [24]. The event is highly exclusive, with 80% of attendees being C-level executives, founders, investors, or policymakers [24][25]. In 2025, the summit hosted 822 CEOs from 168 Fortune 500 companies, alongside investors managing assets worth over €560 billion [24].

The summit's agenda is structured around the "4Fs" framework - Foundation, Frontier, Friction, and Future. This approach tackles a wide range of topics, from sovereign cloud infrastructure to addressing CFOs' concerns about AI’s return on investment [23]. To foster meaningful collaboration, the event includes an invitation-only CxO Summit tailored for Fortune 1000 leaders and an AI-powered platform that facilitates targeted, one-on-one meetings [24][25]. Co-founder Hadrien de Cournon highlights this emphasis on tangible outcomes:

"The CxO Summit exists so companies don't just talk about AI, they leave RAISE with real partnerships, pilots, and signed deals" [24].

Beyond the main conference sessions, the summit also hosts the world’s largest AI hackathon, involving 7,000 developers, and a startup competition that connects emerging businesses with leading venture capitalists [24]. Additionally, Side Events Week expands networking opportunities across Paris, featuring exclusive dinners and workshops for deeper engagement [23][25].

The summit doesn’t stop at networking - it also delivers actionable knowledge through its focused learning tracks.

Learning Opportunities for AI Leaders

The 2026 programme includes over 547 speakers, such as Mark Cuban, Vlad Tenev (CEO of Robinhood), and Mark Papermaster (CTO of AMD), offering attendees a chance to learn directly from peers and industry leaders [23]. For example, in July 2025, GSK’s Danielle Belgrave and Cerebras Systems’ Natalia Vassilieva presented "Molecules at Wafer-Scale", exploring how wafer-scale computing accelerates drug discovery. Similarly, Sanofi’s Bruno Gagliardo and Snowflake’s Larry Orimoloye shared insights on data-driven collaboration in their session, "From Molecule to Market Value" [22][26].

Tracks like Friction focus on workforce transformation and realising value from AI investments, while Frontier addresses the challenges of scaling AI projects from pilots to full adoption [23]. Sessions such as "The ROI Dilemma" and "Value Realization & Capital Allocation" provide practical tools for justifying AI investments. Eric Schmidt has described the summit as:

"The fastest-growing AI Tech conference in Europe, and maybe in history" [23].

Conclusion

AI leadership isn't just about mastering cutting-edge technology - it’s about weaving human values into every decision, building trust, and ensuring that initiatives align with your organisation’s core purpose. As Dr. Dorottya Sallai from LSE wisely notes:

"AI adoption is a cultural transition" [5].

This transition demands that leaders focus on people and processes, not just technical expertise. The evidence points the same way: organisations with well-defined ethical frameworks are 2.5× more likely to gain customer trust and experience 50% fewer failures when scaling Responsible AI [5][28]. On the flip side, 42% of companies abandoned most of their AI projects in 2025 [5], a warning of what can follow when the human element is neglected.

The takeaway here? You can’t scale AI faster than you can scale trust [27]. Building trust means communicating transparently, equipping your teams with the skills to navigate AI confidently, and ensuring every decision is explainable - even to non-experts. Kevin Werbach captures this perfectly:

"If a decision can't be explained, it can't be trusted" [15].

So, how do you put this into practice? Stay curious. Engage with industry events and commit to lifelong learning. AI is evolving at a breakneck pace, and staying ahead means embracing opportunities like the RAISE Summit, which provides actionable insights, practical frameworks, and case studies from industry leaders. These resources can help you move from tactical execution to strategic influence.

Whether your organisation is just starting with AI or scaling enterprise-wide deployments, the formula for success remains the same: lead with purpose, embed ethics into your strategy, and keep people at the heart of every decision. Technology will keep advancing, but your dedication to responsible leadership will determine whether your organisation thrives - or sees its projects join the 95% of AI pilots that don’t succeed [14].

FAQs

Where should we start with AI leadership in our organisation?

To lead effectively in an AI-driven world, it’s crucial to start with a clear strategic vision. This means focusing on aligning AI initiatives with your organisation’s goals, encouraging experimentation, and rethinking traditional processes to integrate AI seamlessly.

Equally important is prioritising human leadership skills - like decision-making, empathy, and trust-building - that AI simply cannot replicate. These qualities form the backbone of strong leadership in a tech-enhanced environment.

Creating a culture of innovation is another essential step. By clearly communicating AI objectives and ensuring teams understand the "why" behind these goals, organisations can make their AI projects not only impactful but also sustainable in the long term.

The real magic happens when you combine human strengths - like creativity and ethical reasoning - with the efficiency and precision of AI. This balance is the cornerstone of effective AI leadership.

How do we measure ROI and adoption for AI initiatives?

Evaluating the success of AI initiatives requires looking at both financial outcomes and organisational impact. When it comes to ROI, key indicators include revenue growth, cost reductions, and the speed at which results are achieved. These metrics provide a clear picture of the financial benefits AI brings to the table.

On the organisational side, adoption is measured by factors such as user engagement, how well AI tools are integrated into existing workflows, and the extent of team-wide usage. These elements highlight how effectively AI is being embraced across the company.

Leadership plays a crucial role here. For instance, having a Chief AI Officer can make a big difference. This role ensures that AI strategies are directly aligned with the company’s broader business objectives, maximizing both adoption and ROI.

By combining financial metrics with organisational ones, businesses can get a more complete understanding of how their AI initiatives are performing.
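
To make the two kinds of metric concrete, here is a minimal sketch that computes a simple ROI figure and an adoption rate for one initiative. The field names and all numbers are hypothetical, and attributing revenue or savings to a specific AI initiative is itself a judgement call that this sketch takes as given.

```python
from dataclasses import dataclass


@dataclass
class InitiativeSnapshot:
    """Hypothetical inputs for one AI initiative over a reporting period."""
    total_cost: float         # licences, infrastructure, training, staff time
    revenue_gain: float       # revenue growth attributed to the initiative
    cost_savings: float       # cost reductions attributed to the initiative
    licensed_users: int       # employees with access to the tool
    weekly_active_users: int  # employees who used it at least once that week


def roi(snapshot: InitiativeSnapshot) -> float:
    """Net benefit divided by cost, as a fraction (0.30 means 30%)."""
    if snapshot.total_cost <= 0:
        raise ValueError("total_cost must be positive")
    benefit = snapshot.revenue_gain + snapshot.cost_savings
    return (benefit - snapshot.total_cost) / snapshot.total_cost


def adoption_rate(snapshot: InitiativeSnapshot) -> float:
    """Share of licensed users who are active in a typical week."""
    if snapshot.licensed_users == 0:
        return 0.0
    return snapshot.weekly_active_users / snapshot.licensed_users


# Made-up figures for illustration only.
snapshot = InitiativeSnapshot(
    total_cost=200_000,
    revenue_gain=150_000,
    cost_savings=110_000,
    licensed_users=400,
    weekly_active_users=260,
)

initiative_roi = roi(snapshot)            # (260,000 - 200,000) / 200,000
initiative_adoption = adoption_rate(snapshot)  # 260 / 400
```

Tracking both numbers side by side helps separate a tool that pays off but is barely used from one that is widely used but not yet paying off.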

What does a practical Responsible AI governance setup look like?

A practical approach to Responsible AI governance ensures that AI systems operate in a way that is ethical, safe, and in line with an organisation's core values. This involves establishing clear accountability and implementing operational measures, such as tracking AI agents and setting up runtime safeguards to minimise risks and prevent harm.

For governance to be effective, it must seamlessly integrate into production environments. This includes real-time oversight, robust issue management processes, and the collection of evidence to demonstrate compliance with relevant standards. Additionally, well-defined policies, supported by lifecycle checkpoints, help maintain control over AI systems from development to deployment.

Engaging a diverse group of stakeholders is another key element. By involving different perspectives, organisations can foster trust and remain agile in adapting to changing regulations and expectations.
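
As a rough illustration of how lifecycle checkpoints, clear ownership, and evidence collection can fit together, the sketch below blocks an AI system from acting until it has passed every required checkpoint and logs each decision. The checkpoint names, class names, and example systems are all hypothetical; a production setup would sit on real identity, approval, and logging infrastructure.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical lifecycle checkpoints; each organisation would define its own.
REQUIRED_CHECKPOINTS = ("design_review", "bias_audit", "deployment_approval")


@dataclass
class AISystemRecord:
    """Inventory entry for one AI system, with a named accountable owner."""
    name: str
    owner: str
    passed_checkpoints: set = field(default_factory=set)

    def missing_checkpoints(self):
        return [c for c in REQUIRED_CHECKPOINTS if c not in self.passed_checkpoints]


@dataclass
class GovernanceLog:
    """Append-only record of decisions, kept as compliance evidence."""
    entries: list = field(default_factory=list)

    def record(self, system, action, allowed, reason):
        self.entries.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "system": system.name,
            "owner": system.owner,
            "action": action,
            "allowed": allowed,
            "reason": reason,
        })


def authorise(system, action, log):
    """Runtime safeguard: allow the action only if all checkpoints are passed."""
    missing = system.missing_checkpoints()
    allowed = not missing
    reason = "all checkpoints passed" if allowed else "missing: " + ", ".join(missing)
    log.record(system, action, allowed, reason)
    return allowed


log = GovernanceLog()
ready = AISystemRecord("credit_scoring", "risk-team", set(REQUIRED_CHECKPOINTS))
draft = AISystemRecord("chat_assistant", "support-team", {"design_review"})

ready_allowed = authorise(ready, "score_application", log)
draft_allowed = authorise(draft, "answer_customer", log)
```

Because every call is logged with the owning team, the same record can drive issue management when something is blocked or goes wrong.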