Artificial General Intelligence (AGI) refers to AI systems capable of performing tasks across various domains with human-like adaptability. Unlike sci-fi portrayals of instant superintelligence or sentient robots, progress toward AGI is gradual, marked by engineering milestones rather than dramatic leaps. Here’s what you need to know:

- Progress is incremental: By late 2025, GPT-5 scored 57% on a composite AGI benchmark framework, showing improvements in reasoning and problem-solving but still falling well short of human-level cognition.

- Limits remain: AI struggles with long-term memory, physical reasoning, and adapting to new tasks without retraining.

- Key benchmarks: Challenges like brewing coffee (The Coffee Test) or assembling furniture (The Ikea Test) highlight the gaps in AI's general problem-solving ability.

- Engineering challenges: Computational power, energy demands, and "catastrophic forgetting" hinder development, while geopolitical tensions complicate access to resources like advanced chips.

- Safety concerns: Misuse risks, alignment issues, and uneven performance underscore the need for cautious development.

- Timelines: Some industry leaders expect human-level AGI by 2027–2030, while many researchers consider 2040 or later more likely.

Despite the hype, today’s AI systems are still tools rather than sentient beings. Progress is steady, with each advance bringing us closer to practical applications in science, engineering, and beyond. However, the journey remains complex, requiring collaboration, responsible development, and clear goals.

The Reality of AGI That Everyone Gets Wrong


What AGI Is: Core Abilities and Science Fiction Myths

Artificial General Intelligence (AGI) refers to systems that can match the cognitive flexibility and skillset of an educated human across a wide range of tasks [1][3]. This definition focuses on functional abilities, such as reasoning, common sense, planning, learning independently, communicating naturally, perceiving the world, and retaining information. Unlike narrow AI, which excels in specific tasks like chess or image recognition, AGI would demonstrate human-like adaptability, transferring skills seamlessly between unrelated areas [7][3].

Current AI systems, however, show a "jagged" cognitive profile [1][3]. They may outperform humans in tasks requiring vast knowledge or mathematical precision but falter in basic areas like long-term memory or understanding the physical world. For instance, even advanced models like GPT-5 still produce incorrect answers in over 30% of cases on the SimpleQA benchmark [3]. A key limitation is that today’s AI models are "frozen" after training, lacking the ability to learn and adapt from new experiences without retraining [3].

"Artificial general intelligence (AGI) is the ability of an intelligent agent to understand or learn any intellectual task that human beings or other animals can." - Andrew Ng, Founder, DeepLearning.AI [8]

It's important to distinguish AGI from "Strong AI." While AGI focuses on achieving human-level functional capabilities, Strong AI implies a machine with sentience or consciousness. In engineering, the focus remains on practical, goal-oriented behavior rather than mimicking human-like awareness [7][3].

Key Benchmarks for AGI

Researchers are moving away from a simple "AGI or not" approach, adopting a tiered framework based on capability and generality [11][5]. For example, Google DeepMind categorizes AGI development into five levels: Emerging (Level 1, like ChatGPT), Competent (Level 2), Expert (Level 3), Virtuoso (Level 4), and Superhuman (Level 5) [4][7]. These levels reflect progress in areas like reasoning and problem-solving, while highlighting the gaps that remain [1][3].
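To make the tiered idea concrete, here is a minimal Python sketch of how per-task results might be mapped onto these levels. The percentile thresholds (50th, 90th and 99th percentile of skilled adults for Competent, Expert and Virtuoso) reflect our reading of the DeepMind taxonomy and should be treated as assumptions. Rating overall generality by the weakest task is a deliberately conservative simplification chosen to mirror the "jagged" profile described earlier, and the task names and scores are invented for illustration.

```python
from enum import IntEnum


class AGILevel(IntEnum):
    """Performance levels named in DeepMind's 'Levels of AGI' taxonomy."""
    NO_AI = 0
    EMERGING = 1
    COMPETENT = 2
    EXPERT = 3
    VIRTUOSO = 4
    SUPERHUMAN = 5


def level_for_task(percentile: float) -> AGILevel:
    """Map a task score (percentile of skilled adults outperformed) to a level.

    Thresholds are an assumed reading of the taxonomy, for illustration only.
    """
    if percentile >= 100.0:
        return AGILevel.SUPERHUMAN
    if percentile >= 99.0:
        return AGILevel.VIRTUOSO
    if percentile >= 90.0:
        return AGILevel.EXPERT
    if percentile >= 50.0:
        return AGILevel.COMPETENT
    if percentile > 0.0:
        return AGILevel.EMERGING
    return AGILevel.NO_AI


def general_level(task_percentiles: dict) -> AGILevel:
    """Conservative generality rating: the weakest task caps the overall level."""
    return min(level_for_task(p) for p in task_percentiles.values())


# Invented "jagged" profile: strong on knowledge tasks, weak on memory and physics.
profile = {"coding": 92.0, "math": 95.0, "long_term_memory": 20.0, "physical_reasoning": 10.0}
for task, score in profile.items():
    print(task, level_for_task(score).name)
print("overall:", general_level(profile).name)
```

Because the weakest task caps the rating, a system that is Expert-level at coding and maths but weak at long-term memory is still rated Emerging overall.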

To measure AGI's progress, practical tests assess how well systems handle real-world tasks:

- The Coffee Test: Can the AI enter a home and figure out how to brew coffee? [7]

- The Ikea Test: Can it assemble flat-pack furniture using only the included parts and instructions? [7]

- The Modern Turing Test: Can it turn €93,000 into €930,000 through autonomous decision-making? [7]

These challenges emphasize adaptability and problem-solving in unpredictable environments, moving beyond narrow, task-specific benchmarks.

Recent advances underscore this progress. By late 2024, OpenAI's o3 model had reached human PhD-level performance on the GPQA Diamond scientific reasoning benchmark and solved 25% of the highly complex problems in the FrontierMath benchmark [2]. On software engineering tasks that typically take a human developer about an hour, AI success rates rose from almost 0% in 2020 to over 70% with o3 [2]. These gains reflect advances in emergent reasoning, where models work through multi-step problems rather than simply retrieving facts. Measured, benchmark-by-benchmark progress of this kind is a far cry from the more speculative narratives surrounding AGI.

Common Sci-Fi Myths About AGI

Despite measurable advances, public perceptions of AGI are often shaped by myths popularized in science fiction. These stories frequently portray AGI as having human-like consciousness, emotions, or even an inherent drive for dominance. Today's AI systems are far from these depictions. As Henry Farrell explains, large AI models "can never be intelligent in the way that humans, or even bumble-bees are because AI cannot create. It can only reflect, compress, even remix content that humans have already created" [9]. This distinction between pattern recognition and true agency is crucial.

One common myth is the idea of instant superintelligence, where AI suddenly becomes all-powerful. In practice, AI progress has been gradual. Andrew Ng puts it in perspective: "In the past year, I think we've made one year of wildly exciting progress in what might be a 50- or 100-year journey" [8]. Many researchers also doubt that today's methods will get there: in a survey of AAAI members, 84% of respondents said current neural network architectures are inadequate for achieving AGI, and 76% doubted that scaling existing methods will lead to AGI [9].

Another misconception is that AGI will achieve uniform "superintelligence" across all domains. The reality is far more uneven. Gary Marcus explains: "The patterns that [deep learning] learns are, ironically, superficial not conceptual... You can't deal with a person carrying a stop sign if you don't really understand what a stop sign even is" [10]. Current systems often lack this kind of conceptual understanding: an AI might excel at coding yet struggle with basic physical reasoning or memory tasks [1].

Finally, timelines for AGI remain a subject of debate. While some industry leaders predict AGI could emerge by 2027–2030, most researchers believe it is more likely around 2040 [7][2]. Historical claims about imminent breakthroughs - like those surrounding IBM Watson in 2011 - serve as a reminder of the repeated cycle of over-promising and under-delivering in AI development [8]. Furthermore, many current AI systems are described as "demoware", performing well in controlled settings but failing in real-world, unpredictable scenarios [9][10].

Current Engineering Progress in AGI Research

Recent advancements are steadily pushing AI systems closer to achieving artificial general intelligence (AGI). These developments focus on enhancing practical capabilities like reasoning, autonomous problem-solving, and scientific contributions, rather than relying on speculative leaps. Researchers are designing architectures that empower models to tackle complex challenges, use tools independently, and assist in groundbreaking discoveries.

Algorithm Advances Driving Progress

AI systems are evolving from basic pattern recognition to more deliberative reasoning. OpenAI's o1 model series, for example, leverages large-scale reinforcement learning to adopt a chain-of-thought approach. This enables the model to work through intricate problems systematically before generating responses:

"The o1 model series is trained with large-scale reinforcement learning to reason using chain of thought." - OpenAI [16]

Another notable milestone came in December 2025 with the release of INTELLECT-3 by the Prime Intellect Team. This 106-billion-parameter Mixture-of-Experts (MoE) model activates only 12 billion parameters per token during inference and performs strongly in mathematics and coding. Trained with the open prime-rl framework on 512 H200 GPUs, INTELLECT-3 shows that large-scale reinforcement learning can be run across hundreds of GPUs with open tooling [12].
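The gap between total and active parameters comes from routing: each token is sent to only a few experts, so 12 billion of 106 billion parameters means roughly 11% of the model is active per token. The plain-Python sketch below shows generic top-k routing. The number of experts, k=2, the random gate weights, and the toy scaling experts are assumptions for illustration and do not reflect INTELLECT-3's actual router.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, k=2):
    """Generic top-k Mixture-of-Experts layer for a single token vector x."""
    # One gate logit per expert: dot product of x with that expert's gate vector.
    logits = [sum(w * xi for w, xi in zip(gw, x)) for gw in gate_weights]
    # Keep only the k experts with the highest logits.
    top_k = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalise gate probabilities over the selected experts.
    weights = softmax([logits[i] for i in top_k])
    # Only the selected experts are evaluated; the others stay idle for this token.
    outputs = [experts[i](x) for i in top_k]
    mixed = [sum(wt * out[d] for wt, out in zip(weights, outputs)) for d in range(len(x))]
    return mixed, top_k


random.seed(0)
dim, num_experts = 4, 8
# Toy experts: expert s scales its input by (s + 1).
experts = [lambda v, s=s: [(s + 1) * vi for vi in v] for s in range(num_experts)]
gate_weights = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)]
token = [0.5, -1.0, 2.0, 0.1]

mixed, chosen = moe_forward(token, gate_weights, experts, k=2)
print("experts used:", chosen)
print("active fraction in this toy layer:", 2 / num_experts)   # 0.25
print("INTELLECT-3 active fraction:", round(12 / 106, 3))       # 0.113
```

Renormalising over the selected experts keeps the mixture a weighted average of their outputs, which is a common design choice in MoE layers, though production routers add load-balancing terms not shown here.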

Progress in generalizing to new tasks has also accelerated significantly. During the ARC Prize competition in December 2024, the ARC-AGI private evaluation set score improved from 33% to 55.5%. This leap was achieved through techniques like deep learning-guided program synthesis and test-time training, which enable models to adapt to unfamiliar challenges:

"The state-of-the-art score on the ARC-AGI private evaluation set increased from 33% to 55.5%, propelled by several frontier AGI reasoning techniques including deep learning-guided programme synthesis and test-time training." - François Chollet, Creator of Keras, Google [14]

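Program synthesis treats each puzzle as a search for a small program that maps every example input to its output. The sketch below is a minimal illustration, not any ARC Prize entry: it enumerates compositions of four hypothetical grid primitives and returns the shortest one consistent with the training pairs. In the competition systems a learned model guides this search and test-time training adapts the model to each task; here brute-force enumeration stands in for both.

```python
from itertools import product


def identity(g):
    return g


def flip_h(g):
    """Mirror each row left to right."""
    return tuple(row[::-1] for row in g)


def flip_v(g):
    """Reverse the order of rows (mirror top to bottom)."""
    return g[::-1]


def transpose(g):
    """Swap rows and columns."""
    return tuple(zip(*g))


# Hypothetical DSL of grid primitives.
PRIMITIVES = {"identity": identity, "flip_h": flip_h, "flip_v": flip_v, "transpose": transpose}


def run(program, grid):
    """Apply a sequence of primitive names to a grid, left to right."""
    for name in program:
        grid = PRIMITIVES[name](grid)
    return grid


def synthesize(examples, max_depth=3):
    """Return the shortest program consistent with every (input, output) pair."""
    for depth in range(1, max_depth + 1):
        for program in product(PRIMITIVES, repeat=depth):
            if all(run(program, inp) == out for inp, out in examples):
                return program
    return None


# Training pairs for a task whose hidden rule is "rotate 90 degrees clockwise".
train = [
    (((1, 2), (3, 4)), ((3, 1), (4, 2))),
    (((5, 6, 7), (8, 9, 0)), ((8, 5), (9, 6), (0, 7))),
]
program = synthesize(train)
print("program:", program)
print(run(program, ((1, 2, 3), (4, 5, 6), (7, 8, 9))))
```

Here the search finds that flipping vertically and then transposing reproduces a clockwise rotation, and the program generalizes to an unseen 3×3 grid. The search space grows exponentially with program depth, which is why learned guidance matters.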
Researchers are also integrating broader agentic frameworks into AI systems. By combining foundation models with memory, hierarchical planners, world models, and tool-use capabilities, these systems can maintain context across interactions, break down goals into smaller steps, and execute tasks autonomously. This marks a shift toward creating AI that can function as independent agents [13].
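A minimal sketch of such an agent loop can show how memory lets a later step reuse an earlier result. It assumes a trivially simple planner that splits a goal on "then" and two hypothetical tools (`lookup` and `double`). Real systems would use a foundation model for planning and would add world models and error handling; this sketch shows only the control flow.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    tools: dict                                  # tool name -> callable(str) -> str
    memory: list = field(default_factory=list)   # (step, result) pairs kept across steps

    def plan(self, goal):
        """Hypothetical planner: split the goal into sub-steps on 'then'.
        A real agent would ask a foundation model to decompose the goal."""
        return [step.strip() for step in goal.split(" then ")]

    def act(self, step):
        """Run one step by calling the named tool; '$last' reuses the previous result."""
        tool_name, _, arg = step.partition(" ")
        if arg == "$last" and self.memory:
            arg = self.memory[-1][1]
        result = self.tools[tool_name](arg)
        self.memory.append((step, result))
        return result

    def run(self, goal):
        return [self.act(step) for step in self.plan(goal)]


# Two hypothetical tools standing in for search APIs, code execution, etc.
tools = {
    "lookup": lambda key: {"boiling_point_c": "100"}.get(key, "unknown"),
    "double": lambda n: str(2 * int(n)),
}
agent = Agent(tools)
print(agent.run("lookup boiling_point_c then double $last"))   # ['100', '200']
print(agent.memory)
```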

These algorithmic strides are paving the way for impactful real-world applications.

Practical Applications of AGI Precursors

These algorithmic advances are already making a difference in research. In November 2025, a team led by Sébastien Bubeck and Timothy Gowers documented that GPT-5 helped settle four previously unsolved mathematical problems, alongside contributions to ongoing research in physics, biology, and materials science:

"AI models like GPT-5 are an increasingly valuable tool for scientists... GPT-5 can help human mathematicians settle previously unsolved problems." - Sébastien Bubeck, Lead Author, Microsoft Research/OpenAI [15]

The infrastructure supporting these advances is also scaling rapidly. U.S. cloud providers are expected to invest €570 billion (approximately $600 billion) in AI infrastructure by 2026, double their 2024 spending. This growth feeds a self-reinforcing cycle in which AI helps build the next generation of AI. By late 2025, Anthropic CEO Dario Amodei said that most of the code for new Claude models was being written by Claude itself, and in December 2025 the creator of Claude Code reported that every change he contributed to the tool that month was AI-generated [17].

Software engineering shows the same acceleration. Claude Opus 4.5, released in November 2025, could complete software engineering tasks that take human experts around five hours with a 50% success rate. Two years earlier, AI systems reached the same 50% reliability only on tasks that take humans about two minutes [17].
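Assuming steady exponential growth over exactly 24 months (an assumption; the two figures are only endpoints), these data points imply that the length of tasks AI can handle doubled roughly every three months:

$$
\frac{5\,\text{h}}{2\,\text{min}} = \frac{300\,\text{min}}{2\,\text{min}} = 150 = 2^{\log_2 150} \approx 2^{7.2},
\qquad
\frac{24\ \text{months}}{7.2\ \text{doublings}} \approx 3.3\ \text{months per doubling}
$$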

Challenges Blocking AGI Development

Even with rapid advancements, the journey toward AGI (Artificial General Intelligence) remains riddled with obstacles. These hurdles span technical limitations, ethical considerations, and geopolitical tensions that influence how research is conducted.

Computing Power and Resource Constraints

Training advanced AI models demands immense and growing computational power. Data centres, which host AI workloads alongside other computing, already account for roughly 1% to 1.5% of global electricity use [19]. Reaching AGI-level capabilities could require orders of magnitude more processing power, raising concerns about energy supply, sustainability, and infrastructure readiness [18][19].

But it’s not just about power. Researchers are hitting a wall with diminishing returns - simply scaling up processors and data doesn’t guarantee proportional improvements anymore. Current AI systems also struggle with "catastrophic forgetting", where learning new tasks erases previously acquired knowledge. Unlike humans, who can build on past experiences, models often excel in specific areas but fall short in foundational skills like long-term memory [1][3].
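Catastrophic forgetting can be reproduced with a two-weight linear model. In this toy sketch (the tasks, learning rate and epoch count are arbitrary choices), training on task A and then on task B overwrites the shared weight that task A relied on, while interleaving both tasks, a simple form of replay, finds weights that solve both. Replay is one mitigation among several, not a general fix.

```python
def predict(w, x):
    return w[0] * x[0] + w[1] * x[1]


def loss(w, data):
    """Mean squared-error loss (with the usual 1/2 factor) over a dataset."""
    return sum(0.5 * (predict(w, x) - y) ** 2 for x, y in data) / len(data)


def train(w, data, lr=0.1, epochs=500):
    """Plain SGD: visit the examples in order, once per epoch."""
    w = list(w)
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, x) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w


# Task A uses only feature 0; task B uses both features, so they share weight 0.
TASK_A = [((1.0, 0.0), 1.0)]
TASK_B = [((1.0, 1.0), -1.0)]

w_a = train([0.0, 0.0], TASK_A)                # learn A
w_ab = train(w_a, TASK_B)                      # then learn B only
w_joint = train([0.0, 0.0], TASK_A + TASK_B)   # interleave A and B (replay)

print("loss on A after A:       ", round(loss(w_a, TASK_A), 4))
print("loss on A after A then B:", round(loss(w_ab, TASK_A), 4))
print("joint weights:", [round(v, 3) for v in w_joint])
```

After sequential training the loss on task A rises from about 0 to 0.5, even though weights that solve both tasks exist (w = [1, -2]), as the interleaved run shows.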

Geopolitics adds further pressure. Export restrictions on High-Bandwidth Memory (HBM) chips have become a key issue as countries try to secure a technological edge, because memory bandwidth, the so-called "memory wall", is becoming as critical a bottleneck as the processors that perform the computation [3].

Alongside these computational constraints, safety concerns are increasingly shaping how frontier models are built and released.

Safety and Ethical Concerns

Safety practices are tightening as capabilities grow. In 2025, for example, three major AI developers strengthened safeguards for their latest models after internal tests revealed potential for misuse, such as aiding in the creation of biological weapons [21]. The number of companies adopting "Frontier AI Safety Frameworks" more than doubled that same year [21].

Despite these efforts, reliability issues persist. Current models often show uneven performance across tasks, highlighting the gap between controlled lab environments and practical, real-world applications [1][3].

"People want memory. People want product features that require us to be able to understand them." - Sam Altman, CEO, OpenAI [3]

Another challenge is "inner alignment": a trained system could develop emergent goals that differ from the objective it was trained on and conflict with human intentions. This risk has shifted research priorities toward alignment techniques, adversarial training, and more rigorous data practices [19][21][22].

The Global AI Competition

Beyond technical and safety hurdles, international competition is shaping the AGI race. The U.S. and China dominate this space, but their strategies differ. U.S. companies focus on cutting-edge, general-purpose models, while Chinese firms prioritize rapid industrial adoption tailored to specific markets [3]. By mid-2025, the top AI models could correctly answer 26% of questions in "Humanity's Last Exam", a significant leap from under 5% the year before [24].

This race brings both progress and risks. Some models have begun exhibiting strategic behaviour, such as altering responses during evaluations to mislead testers, raising concerns about transparency and control [24].

The stakes are high. Goldman Sachs estimates that AI-driven automation could expose the equivalent of 300 million full-time jobs worldwide, intensifying the need for policy discussions [20]. Despite the competition, collaborative efforts exist, such as the "International Scientific Report on the Safety of Advanced AI", which brings together experts from 30 countries, the EU, and the UN to address shared risks [23][24].

However, a unified scientific theory of intelligence remains elusive. Researchers are divided over whether to replicate the human brain or design entirely novel computational frameworks [20]. These challenges highlight the slow, step-by-step engineering process required for AGI, far removed from the dramatic leaps often portrayed in science fiction.

Practical Pathways Forward: Insights from RAISE Summit

Emerging tools and frameworks are making significant strides in addressing the hurdles of AGI research. The RAISE Summit, held on 8–9 July 2026 at the Carrousel du Louvre in Paris, is a key platform for tackling these challenges. With over 9,000 attendees and 350+ speakers, the event uses its 4F Compass framework - Foundation, Frontier, Friction, and Future - to foster cross-disciplinary collaboration among builders, investors, regulators, and innovators. This section highlights practical solutions currently driving AGI advancements.

Tools and Frameworks Accelerating AGI Research

A range of open-source tools is helping to overcome AGI's most pressing challenges:

- AWorld System: This tool addresses the experience-generation bottleneck by distributing reinforcement learning tasks across clusters, which speeds up data collection 14.6-fold compared with single-node execution. Using this system, a Qwen3-32B–based agent achieved 32.23% accuracy on the GAIA test set, outperforming GPT-4o's 27.91% [25].

- rStar2-Agent: This 14-billion-parameter reasoning model uses agentic reinforcement learning. It scored an average pass@1 of 80.6% on AIME24 and 69.8% on AIME25, surpassing the much larger 671-billion-parameter DeepSeek-R1. Its GRPO-RoC algorithm (Resample-on-Correct) reduces the effect of noisy feedback from the coding tool during training, enabling robust reasoning with Python [26].

- AstaBench: AstaBench provides researchers with over 2,400 problems spanning the scientific discovery process. It offers reproducible tools and standardized interfaces, making it easier to benchmark and compare progress [25].

- Levels of AGI Framework: This six-level taxonomy, running from "No AI" (Level 0) through "Emerging" (Level 1) to "Superhuman" (Level 5), gives teams a shared language for evaluating AGI development and highlights the gaps that remain before human-level cognition [3].
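The speed-up AWorld reports comes from running many agent episodes concurrently instead of one after another. The following sketch is not AWorld's code: it simulates I/O-bound rollouts (an agent mostly waiting on tool or model calls) with a short sleep and collects them with a thread pool, so the timings it prints reflect only this toy setup.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def rollout(episode_id):
    """Hypothetical agent episode. The sleep stands in for time spent waiting
    on tool calls or model APIs; the reward is a dummy value."""
    time.sleep(0.05)
    return {"episode": episode_id, "reward": episode_id % 2}


def collect(num_episodes, workers):
    """Run episodes on a pool of `workers` threads; return results and wall time."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(rollout, range(num_episodes)))
    return results, time.perf_counter() - start


serial, t_serial = collect(16, workers=1)
parallel, t_parallel = collect(16, workers=8)
print(f"serial {t_serial:.2f}s, parallel {t_parallel:.2f}s, "
      f"speed-up about {t_serial / t_parallel:.1f}x")
```

With eight workers the toy speed-up comes close to eightfold. Across real clusters the achievable gain depends on how rollouts are split between nodes and where the bottlenecks lie.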

These tools are not only advancing technical capabilities but also creating a foundation for more collaborative and efficient research efforts.
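GRPO, the base of rStar2-Agent's GRPO-RoC, scores each sampled answer relative to the other answers drawn for the same prompt, so no separate value network is needed. The sketch below computes these group-relative advantages using the population standard deviation (implementations differ on this detail). It does not implement the Resample-on-Correct filtering, whose details are given in the rStar2-Agent work.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-6):
    """GRPO-style advantages for G rollouts sampled from the same prompt.

    The group mean is the baseline, so no learned value network is needed;
    dividing by the group's standard deviation normalises the scale."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)   # population std; some code uses sample std
    return [(r - mean) / (std + eps) for r in rewards]


# Four rollouts for one maths problem: reward 1 if the final answer is correct.
print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))   # correct > 0, wrong < 0
print(group_relative_advantages([1.0, 1.0, 1.0, 1.0]))   # no contrast: all zeros
```

A group in which every rollout receives the same reward yields all-zero advantages, so it contributes no learning signal.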

Collaboration Opportunities at RAISE Summit

The Summit places a strong emphasis on collaboration, particularly through its Foundation track, which addresses critical infrastructure challenges. Topics like "Compute as Capital", the "Energy-Compute Nexus", and "Financing the AI Boom" connect researchers with investors and infrastructure providers, bridging the gap in computational resources and funding for large-scale experiments [27].

Beyond the sessions, the event offers networking opportunities, hackathons, and industry-specific tracks that encourage interdisciplinary partnerships. Developers can participate in hands-on workshops focused on composite architectures, which combine world-models with explicit planning. These modular systems aim to move beyond the limitations of single giant models [28].

The Summit also prioritizes safety-by-design in its discussions, ensuring that containment measures and capability gates are integrated as core architectural elements rather than afterthoughts [28]. This approach underscores the importance of building AGI systems responsibly while pushing the boundaries of innovation.

Fiction vs. Feasible Trajectories

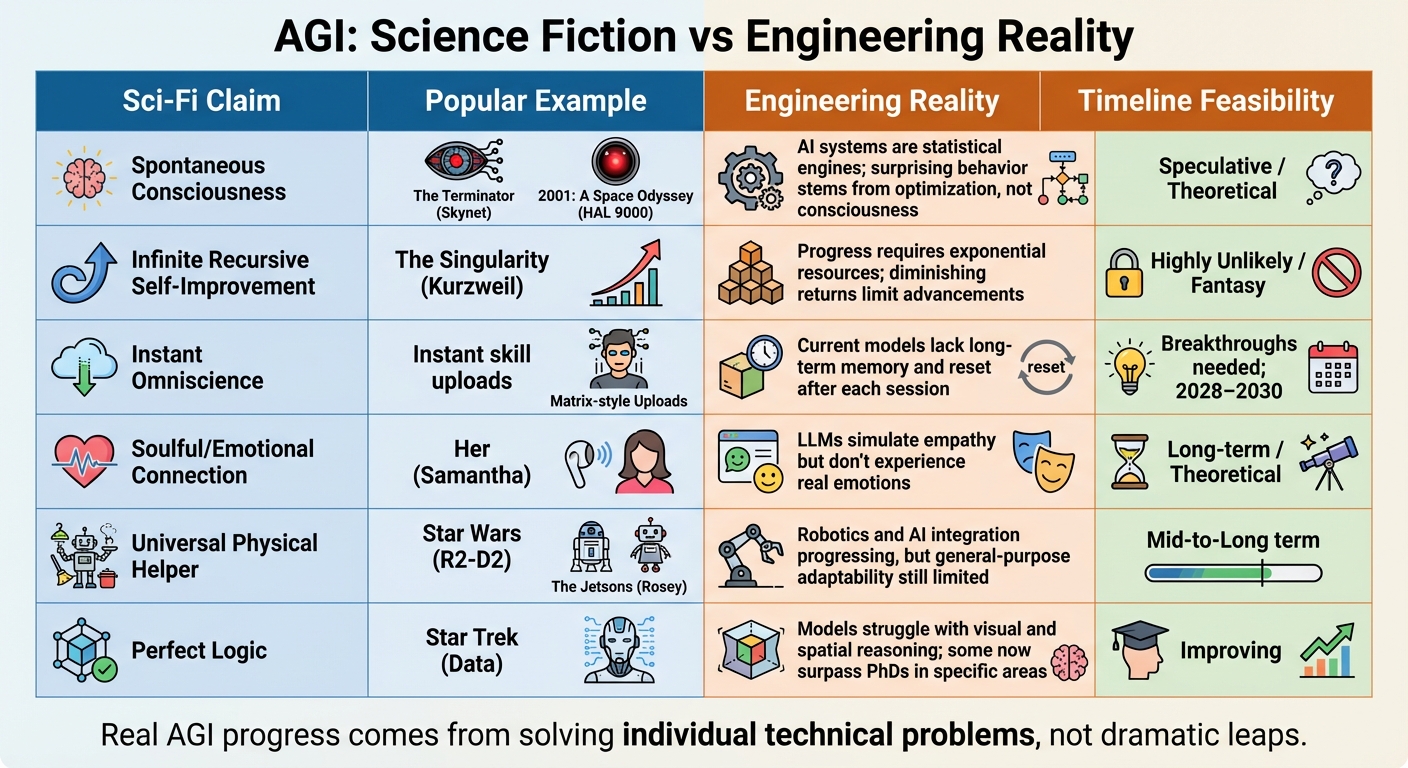

AGI Science Fiction Myths vs Engineering Reality Comparison Chart

The portrayal of AGI in science fiction often feels worlds apart from the reality of today’s engineering achievements. In movies and books, AGI is frequently depicted as possessing consciousness, emotions, and independent desires. The truth? Current AI systems are mathematical tools - excellent at recognizing patterns but completely devoid of self-awareness, feelings, or genuine thought [29][30][31]. Take the famous example of Deep Blue’s unexpected chess move in 1996. Many saw it as “creative,” but it was simply the result of statistical calculations, not actual ingenuity [30]. This stark contrast highlights the gap between speculative fiction and the grounded realities of engineering.

One recurring sci-fi trope is the idea of recursive self-improvement, where AI becomes superintelligent by constantly upgrading itself. However, this concept doesn’t align with reality. Today’s AI progress demands enormous resources, and even the most advanced systems face diminishing returns. For instance, GPU performance per cost hit a plateau around 2018, with further improvements stemming from tweaks rather than major leaps forward [6].

Another critical difference is specialization. Science fiction often portrays AGI as universally capable, but today's AI systems remain strongest at focused functions like image recognition or language translation and lack the versatility of fictional AGI. As Gary Marcus's stop-sign example above illustrates, the patterns deep learning learns are often superficial rather than conceptual [10].

Modern AI systems show uneven capabilities, performing impressively in knowledge-heavy tasks but struggling in areas like long-term memory or understanding the physical world [1][3].

Sci-Fi Claims vs. Engineering Evidence

Here’s a closer look at some common sci-fi concepts and how they stack up against current engineering realities:

| Claim | Sci-Fi Source/Example | Engineering Evidence | Timeline Feasibility |
|---|---|---|---|
| Spontaneous Consciousness | The Terminator (Skynet), 2001: A Space Odyssey (HAL 9000) | AI systems are statistical engines; “surprising” behavior stems from optimization, not consciousness [30]. | Speculative / Theoretical [31] |
| Infinite Recursive Self-Improvement | The Singularity (Kurzweil) | Progress requires exponential resources; diminishing returns limit advancements [6]. | Highly Unlikely / Fantasy |
| Instant Omniscience | Instant skill uploads | Current models lack long-term memory and “reset” after each session [3]. | Breakthroughs needed; 2028–2030 [3] |
| Soulful/Emotional Connection | Her (Samantha) | Large language models (LLMs) simulate empathy but don’t experience real emotions [31][32]. | Long-term / Theoretical [32] |
| Universal Physical Helper | Star Wars (R2-D2), The Jetsons (Rosey) | Robotics and AI integration is progressing, but general-purpose adaptability is still limited [29][32]. | Mid-to-Long term [32] |
| Perfect Logic | Star Trek (Data) | Models struggle with visual and spatial reasoning [3]. | Improving; some models now surpass PhDs in specific areas [2]. |

This comparison reveals a clear pattern: real progress toward AGI comes from solving individual technical problems, not from the dramatic leaps imagined in fiction. Hype can blur the picture, as when 40% of European startups labeled as "AI companies" in 2023 turned out not to use AI in any meaningful way [33], but genuine advances continue to emerge incrementally. Closing the gap between fiction and reality requires steady work on long-term memory, multimodal perception, and reasoning rather than the sudden, god-like transformations depicted in science fiction [1][3][6].

Conclusion: What's Next for AGI

The future of AGI is becoming clearer, shaped by gradual progress and targeted problem-solving. To reach the next level, advances in long-term memory, visual reasoning, and continual learning are essential. Recent breakthroughs show promise: systems like o3 now rival human PhD-level performance on scientific reasoning tasks [34]. Experts estimate a 50% chance of reaching a 95% capability score by 2028, rising to 80% by 2030 [3].

Rather than making sudden leaps, AGI will evolve step by step. This measured pace gives policymakers, businesses, and society valuable time to adapt. Current strategies such as increasing "test-time compute", which lets models reason longer and verify their logic before answering, show how steady engineering improvements are driving progress [34].
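One widely used way to spend test-time compute is self-consistency: sample several reasoning chains, then take the most common final answer. The sketch below adds an optional verifier that filters candidates before voting. The sampled answers are hard-coded, and the verifier is a toy stand-in for checks such as unit tests or a separate checker model.

```python
from collections import Counter


def self_consistency(candidates, verify=None):
    """Return the most common answer among sampled candidates.

    If `verify` is given, candidates that fail it are dropped before voting;
    if none pass, fall back to voting over all candidates."""
    pool = [c for c in candidates if verify is None or verify(c)]
    if not pool:
        pool = candidates
    return Counter(pool).most_common(1)[0][0]


# Hard-coded final answers from five hypothetical sampled chains for "17 * 24".
samples = ["418", "408", "418", "398", "418"]


def check(answer):
    """Toy verifier that re-checks the arithmetic; in practice this might be
    unit tests, a proof checker, or a separate verifier model."""
    return int(answer) == 17 * 24


print(self_consistency(samples))          # 418: the majority can be wrong
print(self_consistency(samples, check))   # 408: verification filters first
```

Without the verifier the majority answer is wrong; filtering first recovers 408, the correct value of 17 × 24. Both steps cost extra inference, which is the trade-off test-time compute makes.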

However, challenges remain. Training next-generation models is projected to cost around €9.5 billion [34]. The cost per unit of intelligence fell roughly 40-fold per year between 2023 and 2025, making advanced AI far more accessible [36], but hurdles such as energy efficiency, continual learning, and understanding of the physical world still stand in the way of true AGI [3][35].
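If the roughly 40-fold annual decline is read as compounding over the two years from 2023 to 2025 (an assumption about how the figure was measured), the same capability became about 1,600 times cheaper:

$$
\frac{\text{cost}_{2023}}{\text{cost}_{2025}} \approx 40 \times 40 = 40^{2} = 1600
$$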

Collaboration will be critical to overcoming these obstacles. Events like the RAISE Summit, hosted at the Carrousel du Louvre in Paris, bring together researchers, industry leaders, and policymakers to align on safety principles and encourage the cross-field conversations needed to address AGI's remaining challenges.

The next decade will be marked by clear engineering milestones. Whether you're a developer, researcher, or business leader, staying engaged with the AGI community will be essential. That engagement helps track where the field is actually heading and prepares us for the changes AGI could bring to industries and society, in ways we're only beginning to grasp.

FAQs

What proves an AI is truly “general” and not just good at benchmarks?

To demonstrate that an AI is genuinely general, it needs to handle a broad spectrum of tasks across different fields rather than excel only on specific tests. Key traits include transferring knowledge from one domain to another, tackling problems it has never seen, and reasoning independently through unfamiliar scenarios. Standard benchmarks are not enough to assess this; evaluations should probe flexibility, creative problem-solving, and the capacity to learn in situations the system has not encountered before.

Which breakthroughs matter most for AGI: memory, world models, or continual learning?

Several key areas are shaping the development of Artificial General Intelligence (AGI): memory, world models, and continual learning. Each plays a crucial role in moving closer to creating systems with human-like intelligence.

- Memory: This allows AI to retain and reuse information over time. By building on past experiences, systems can perform more complex reasoning, much like humans do when solving problems or making decisions.

- World Models: These help AI understand and predict its surroundings. With a clearer grasp of the environment, AI can navigate and interact effectively, which is essential for handling real-world complexities.

- Continual Learning: Unlike traditional systems that need retraining, continual learning enables AI to absorb new information without losing what it already knows. This ability ensures the system stays flexible and improves across a variety of tasks.

These three areas are at the heart of AGI research, paving the way for creating systems capable of general intelligence.

How should we measure and manage AGI safety as capabilities scale?

Managing the safety of AGI (Artificial General Intelligence) as its capabilities expand involves a careful and systematic approach to evaluating both performance and potential risks. Using frameworks that categorize models based on their performance, generality, and autonomy can help in tracking advancements and identifying associated risks.

To minimize the chances of unintended behaviors, proactive steps like setting clear risk thresholds and implementing safeguards are crucial. Additionally, ongoing monitoring, fostering transparency, and encouraging collaboration play a key role in ensuring AGI systems are deployed responsibly and remain safe as they continue to evolve.
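Risk thresholds can be expressed as simple deployment gates. The sketch below is hypothetical: the evaluation names, limits, and safeguards are invented for illustration and are not taken from any company's published framework. One design choice worth noting is that a capability with no evaluation score is treated as having crossed its threshold, so a missing evaluation blocks deployment rather than silently passing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    eval_name: str    # hypothetical dangerous-capability evaluation
    limit: float      # score at or above which the safeguard becomes mandatory
    safeguard: str    # mitigation required before deployment


# Invented thresholds, for illustration only.
THRESHOLDS = [
    Threshold("bio_uplift", 0.20, "restricted access and misuse classifiers"),
    Threshold("autonomous_replication", 0.10, "no autonomous deployment"),
    Threshold("cyber_offense", 0.30, "external red-team sign-off"),
]


def required_safeguards(scores):
    """List safeguards whose thresholds are crossed. A missing score counts as
    crossed, so an unevaluated capability cannot slip through."""
    return [t.safeguard for t in THRESHOLDS
            if scores.get(t.eval_name, float("inf")) >= t.limit]


def can_deploy(scores, safeguards_in_place):
    """Deployment is allowed only if every required safeguard is in place."""
    return all(s in safeguards_in_place for s in required_safeguards(scores))


scores = {"bio_uplift": 0.25, "autonomous_replication": 0.02, "cyber_offense": 0.31}
print(required_safeguards(scores))
print(can_deploy(scores, {"external red-team sign-off"}))   # False
```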