Generative AI is evolving fast, and the RAISE Summit 2025 in Paris showcased its latest trends and applications. Here’s a quick breakdown of key takeaways:

- Agentic AI: Autonomous systems are gaining traction, with approaches like "agentic coding" streamlining complex tasks. Example: Lovable scaled to $75M ARR in 7 months.

- Ethics & Governance: Leaders discussed AI reliability concerns and frameworks for detecting and correcting misbehavior. Microsoft and UN collaborations aim to set global standards.

- Business Impact: CEOs suggested that tomorrow's leaders may manage fleets of AI agents rather than people. Sovereign AI and token economies are reshaping industries.

- Tech Developments: Post-LLM agentic architectures and multi-modal AI are driving efficiency. Mistral’s Magistral reasoning model and tools like Hirundo.io’s machine unlearning platform are setting new benchmarks.

- Real-World Applications: From AI-powered customer service to healthcare tools like CliniKiosk, generative AI is delivering measurable results across sectors.

The summit highlighted the shift from experimentation to practical implementation, with a focus on efficiency, ethics, and global collaboration. The next RAISE Summit in July 2026 promises even more advancements.

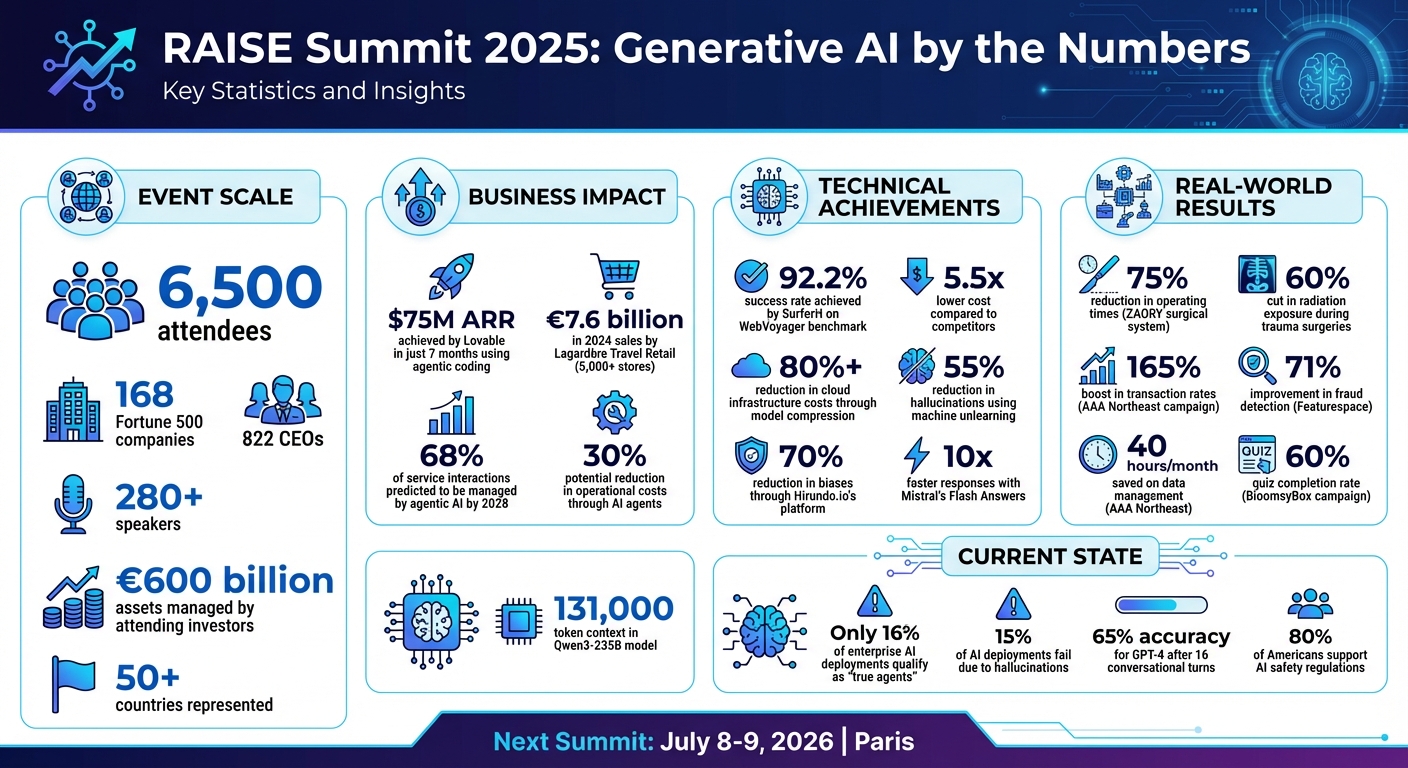

RAISE Summit 2025 Key Statistics and Impact Metrics

Raise Summit 2025, Paris, Panel "Orchestrating the Future: Agentic AI and Autonomous Workflows"


Keynote Speeches: Industry Leaders on Generative AI

The RAISE Summit 2025 brought together 822 CEOs, representatives of 168 Fortune 500 companies, and investors managing over €600 billion in assets [9]. The keynote sessions covered the creative, ethical, and strategic dimensions of generative AI and its potential to transform how organisations work.

Generative AI in Creative Industries

Alison Wagonfeld, VP of Marketing at Google Cloud, delivered a keynote titled "Marketing in the AI Era" in July 2025. She explored how generative AI is reshaping marketing by automating content creation and tailoring customer experiences [3][1]. However, her session also spotlighted a key challenge: ensuring fair compensation for creators. Cloudflare echoed this concern, advocating for systems that reward creators when their work is used to train AI models [3][1].

Beyond marketing, speakers showcased how generative AI integrates text, image, and video outputs into seamless workflows, streamlining processes for creative teams. While these innovations hold immense potential, they also raise pressing ethical questions about responsible implementation.

Ethics and Responsibility in Generative AI

Atin Sanyal, Co-Founder and CTO of Galileo, highlighted a growing paradox in generative AI development:

"The irony of GenAI progress? Models are getting smarter - but leaders are losing confidence in shipping them." [1]

This "reliability gap" became a recurring theme across the summit. Discussions focused on moving beyond theoretical ethics to practical solutions, such as real-time frameworks that detect and correct AI misbehavior through autonomous interventions [8].

Microsoft representatives shared insights on shaping international standards through their work with the UN Secretary-General's Advisory Body on AI. They stressed the importance of a "whole-of-society approach" to governance [6][7].

The summit introduced the "4Fs" framework, whose "Friction" pillar addresses compliance, safety protocols, and public policy challenges. Technical talks also covered privacy engineering and model compression, including compression techniques reported to reduce cloud infrastructure costs by over 80% while maintaining accuracy [8].
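The talks did not specify which compression techniques produced the reported savings. As one common illustration, post-training int8 quantization stores each weight in 1 byte instead of the 4 bytes a float32 needs, a 75% cut in weight storage before any other optimisation. The minimal sketch below assumes symmetric, per-tensor quantization in pure Python; `quantize_int8` and `dequantize_int8` are hypothetical helper names, and a production system would use its framework's quantization tooling instead.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: each weight w is stored as an int q with w approximately q * scale."""
    max_abs = max(abs(w) for w in weights)
    # Map the largest magnitude to 127; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]


weights = [0.12, -0.5, 0.33, 0.0, 0.49]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)

# Rounding to the nearest code bounds the per-weight error by scale / 2.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
# Storage per weight drops from 4 bytes (float32) to 1 byte (int8), ignoring the single scale value.
storage_saving = 1 - 1 / 4
print(q, round(max_error, 5), storage_saving)
```

Reported savings above 75% would typically come from combining quantization with other steps such as pruning, distillation, or better hardware utilisation; the sketch shows only the storage arithmetic of one technique.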

Generative AI in Business Strategy

Daniel Dines, CEO and Founder of UiPath, posed a provocative question:

"What if the CEOs of tomorrow don't manage people at all - but fleets of AI agents?" [1]

This idea reflects a shift toward autonomous AI agents handling tasks like coding, sales, and customer support [3][1]. Paul Bloch, Co-Founder of DDN, reinforced this vision, stating:

"AI is the Pivot - Data is the Power." [3]

Keynotes also underscored the importance of Sovereign AI, with companies like Nscale and BUZZ HPC detailing strategies for building "AI factories" that ensure compliance and maintain control within national borders [3][1]. These discussions highlighted concerns about data security, algorithmic independence, and national interests in AI development.

Another intriguing concept introduced was the "Token Economy" - a model where tokens become the foundation of AI-driven commerce and valuation. This approach illustrates how generative AI is reshaping both creative and operational strategies, influencing industries at every level.

New Technologies: Latest Generative AI Developments

New Models and Architectures

The field of generative AI is undergoing a transformation, with advancements that go beyond traditional large language models. These innovations are laying the groundwork for a new era of AI systems.

A key highlight from the summit was the shift toward what Naveen Rao of Databricks calls "post-large language models." These systems rely on autonomous agents to plan and execute multi-step tasks, moving beyond the static capabilities of earlier models [5]. UiPath illustrated this shift by showcasing how they are "agentifying" their software robots, turning automation into a network of self-governing agents [5]. Interestingly, only 16% of enterprise AI deployments currently meet the criteria for "true agents" - systems capable of planning, adapting, and executing independently - but this approach is quickly becoming the focus for future architectures [11].

Mistral AI introduced Magistral, a reasoning model tailored for domain-specific, multilingual tasks. Its "Flash Answers" mode in Le Chat delivers responses up to 10 times faster than many competitors [10]. Cerebras Systems and Groq are also pushing the boundaries of inference speed and efficiency. Served on such platforms, open models like Alibaba's Qwen3-235B offer a 131,000-token context window at as little as one-tenth of the cost [4][5][12]. DataDirect Networks presented storage solutions designed for the immense demands of AI training, while Vultr emphasized its "sovereign cloud" infrastructure, which lets enterprises scale AI while adhering to local data regulations and signals a move toward production-ready AI systems [5].

As these agentic architectures evolve, the summit also spotlighted the rise of multi-modal systems - technologies that seamlessly integrate diverse media types into a single framework.

Multi-Modal AI: Text, Image, and Video Integration

One of the summit's key themes was multi-modal AI, which focuses on combining text, images, videos, music, and even 3D objects into unified systems [13][2]. This progress is powered by techniques like self-supervised learning, mixture of experts, reinforcement learning from human feedback, and chain-of-thought prompting [13].

A standout example came from H Company, which open-sourced SurferH, a browser-based automation agent. When paired with their Holo‑1 model, this system achieved a 92.2% success rate on the WebVoyager benchmark while operating at 5.5 times lower cost than leading competitors [4]. Bright Data also contributed by offering its Web Archive - a collection of 200 billion cached webpages - for AI training and search purposes [4].

The summit also showcased practical applications of these technologies. Drill Surgeries demonstrated ZAORY, an AI-powered surgical guidance system that reduced operating times by 75% and cut radiation exposure by 60% during trauma surgeries [4]. Iridesense applied advanced 3D multispectral LiDAR to enable precise, real-time analysis of plant health and soil moisture, revolutionizing precision agriculture [4]. Hirundo.io, meanwhile, captured attention with its machine unlearning platform, which won the "RAISE the Stakes" competition. This system enables AI models to "forget" specific data points, reducing hallucinations by 55% and biases by 70% during testing [4]. This innovation addresses critical concerns around data privacy and reliability, offering a practical path to more accurate and trustworthy AI systems.

Panel Discussions: Challenges and Opportunities

Scaling Generative AI Across Industries

A recurring topic in the panels was the struggle to scale generative AI in enterprise settings, where both reliability and infrastructure proved to be roadblocks. Around 15% of AI deployments fail due to issues like hallucinations, where models generate inaccurate or misleading information [15]. Adding to the complexity, companies are grappling with GPU shortages, and some teams resort to unapproved external APIs, referred to as "shadow AI", which introduces significant security risks [14].

Paddy Srinivasan, CEO of DigitalOcean, shared his perspective on regulation:

"We just need to let the free market ecosystem play itself out... Of course, there needs to be just enough regulation to make sure that we're not going off the reservation, but I feel this is not the time to really clamp down." [16]

Another challenge is maintaining context across long conversations at scale. Even advanced models like GPT-4 achieve only about 65% accuracy after 16 conversational turns, although companies like Teneo.ai have managed over 10 million monthly interactions with sub-second response times [15]. Experts suggested that Retrieval-Augmented Generation (RAG) could help by grounding model answers in specific, up-to-date enterprise data, which reduces hallucinations and improves domain-specific accuracy [21][23].
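The RAG idea can be sketched in a few lines: retrieve the enterprise documents most relevant to a question, then instruct the model to answer only from them. This minimal sketch assumes a bag-of-words cosine similarity as a stand-in for the embedding model and vector store a real deployment would use, and it stops at building the prompt rather than calling any particular LLM API. The document snippets and function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Rank documents by similarity to the query and keep the top k."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Ground the model: only retrieved context goes into the prompt."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below. If the answer is not there, say you don't know.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


docs = [
    "Refunds are processed within 5 business days.",
    "Our support line is open Monday to Friday.",
    "Premium plans include priority routing.",
]
print(build_prompt("How long do refunds take?", docs, k=1))
```

Swapping `retrieve` for an embedding-based search changes the ranking quality, not the overall shape of the pipeline: retrieval narrows the context, and the prompt constrains the model to it.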

Infrastructure optimisation also took center stage. Panellists promoted strategies like GPU-as-a-Service (GPUaaS) and Models-as-a-Service (MaaS). For instance, vLLM, an open-source inference runtime, can reduce GPU usage by a factor of 2 to 3 while maintaining 99% accuracy for models like Llama and Mistral [14]. Centralised model deployment, in which teams access shared models via APIs, also prevents version drift and eliminates redundant resource usage [14].
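The version-drift point is easiest to see from the consumer side: if every team resolves a model by name through one shared registry, an upgrade happens once and everyone picks it up. The sketch below is a hypothetical in-memory registry; the class, the model name, and the internal URLs are illustrative assumptions, not part of vLLM or any named product.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ModelEndpoint:
    name: str
    version: str
    url: str  # hypothetical internal serving endpoint


class ModelRegistry:
    """Hypothetical central registry: one pinned version per shared model name."""

    def __init__(self) -> None:
        self._endpoints: Dict[str, ModelEndpoint] = {}

    def publish(self, name: str, version: str, url: str) -> None:
        # The platform team is the only writer; publishing replaces the previous version.
        self._endpoints[name] = ModelEndpoint(name, version, url)

    def resolve(self, name: str) -> ModelEndpoint:
        # Teams read from here instead of hosting their own copies.
        if name not in self._endpoints:
            raise KeyError(f"model {name!r} is not registered")
        return self._endpoints[name]


registry = ModelRegistry()
registry.publish("chat-default", "v1", "http://models.internal/chat/v1")

# Two teams resolve the same name and get the same version: no drift.
team_a = registry.resolve("chat-default")
team_b = registry.resolve("chat-default")

# One upgrade by the platform team is seen by every consumer.
registry.publish("chat-default", "v2", "http://models.internal/chat/v2")
upgraded = registry.resolve("chat-default")
print(team_a == team_b, upgraded.version)
```

In practice the registry would sit in front of a shared serving layer (for example, a vLLM deployment), so consolidating lookups also consolidates GPU usage.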

These infrastructure challenges naturally lead to broader concerns about data privacy and security.

Data Privacy and Security in Generative AI

Balancing rapid innovation with regulatory compliance was another hot topic. With the European Union's AI Act set to impose new requirements for general-purpose AI models by August 2025, panellists debated how best to strike a balance between oversight and market freedom [16].

Illia Polosukhin, Co-founder of NEAR Protocol, argued for technical solutions over government-led regulation:

"Regulation by government is probably not the right instrument... Decentralisation, encryption, and confidential computing - which protects data during processing - offer a more reliable security framework than what governments provide." [16]

Interestingly, despite concerns about over-regulation, 80% of Americans support rules to ensure AI safety, even if they might slow innovation [16]. Confidential computing, which protects data while it is being processed, emerged as a key technology for addressing enterprise worries about data leakage and compliance. Sarah Franklin, CEO of Lattice, pointed out another challenge:

"Without clear definitions and widespread education, fear and uncertainty will prevail." [16]

Practical solutions discussed in the panels included setting up data anonymisation pipelines through differential privacy or synthetic data generation. Federated learning was recommended for international operations dealing with diverse privacy laws, while Industrial Knowledge Graphs were highlighted as a way to organise and connect scattered data into structured, usable formats [22][23].
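Differential privacy, one of the anonymisation options mentioned, is often introduced through the Laplace mechanism: add calibrated random noise to an aggregate so that no single record noticeably changes the released result. The sketch below releases a noisy count; the patient records, the `private_count` name, and the choice of epsilon are illustrative assumptions, and a production pipeline would also track a privacy budget across queries.

```python
import random
from typing import Callable, Iterable


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two independent exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records: Iterable[dict], predicate: Callable[[dict], bool],
                  epsilon: float, rng: random.Random) -> float:
    """Epsilon-DP count: adding or removing one record changes a count by at most 1
    (sensitivity 1), so Laplace noise with scale 1 / epsilon is sufficient."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


def is_senior(record: dict) -> bool:
    return record["age"] >= 65


patients = [{"age": a} for a in (34, 51, 67, 72, 45, 80, 59)]  # true senior count is 3
rng = random.Random(7)

print(round(private_count(patients, is_senior, epsilon=0.5, rng=rng), 2))

# Each release is noisy, but the noise is centred on zero. (Averaging many releases
# would spend privacy budget; it is done here only to check that centring.)
releases = [private_count(patients, is_senior, epsilon=1.0, rng=rng) for _ in range(20000)]
average = sum(releases) / len(releases)
```

Smaller epsilon means more noise and stronger privacy; choosing it is a policy decision as much as a technical one.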

While robust technical frameworks are crucial, the human factor - through education and effective change management - was repeatedly emphasised as equally important for successful AI implementation.

Practical Applications: Case Studies from the Summit

Improving Customer Experiences

At the RAISE Summit, businesses unveiled how generative AI is moving beyond basic chatbot features to deliver impactful customer service solutions. Parloa, a European AI unicorn, showcased its advanced AI platform for customer support. CEO Malte Kosub and OpenAI's Laura Modiano demonstrated how the platform autonomously handles complex customer queries, going far beyond pre-scripted responses to deliver real solutions [3].

AAA Northeast shared results from using Adobe's AI Assistant. Their team saved 40 hours per month on data schema management and an additional 36 hours on audience hygiene tasks. In one car rental campaign, they achieved a 165% boost in transaction rates while targeting only a third of their original audience [19]. Similarly, BloomsyBox used an AI-powered quiz bot during a Mother's Day campaign, achieving a 60% completion rate, a 78% prize claim rate, and 38% customer engagement for creating custom greetings [21].

The financial sector also brought forward transformative AI use cases. Featurespace introduced its TallierLTM™ generative AI model, which analyses transaction histories to detect fraudulent activities. This model improved fraud value detection by 71% compared to previous benchmarks [21]. Meanwhile, MetLife adopted AI to analyse customer emotions in real time during calls, leading to a 3.5% increase in first-call resolutions and a 13% rise in customer satisfaction scores [21].

These innovations demonstrate how generative AI is reshaping customer interactions across industries, paving the way for even more sector-specific transformations.

Healthcare and Life Sciences Applications

The healthcare and life sciences sectors showcased how AI is accelerating advancements in drug discovery and clinical applications. GSK and Cerebras revealed their "Molecules at Wafer-Scale" project, which uses ultra-fast inference and specialised hardware to speed up life sciences research significantly [3]. Sanofi, collaborating with Snowflake, presented an AI-driven strategy covering the entire pharmaceutical lifecycle - from molecule discovery to market delivery - highlighting how AI optimises every stage of development [3].

Clinical applications also took center stage. Researcher Ela Adhikari introduced CliniKiosk, a digital health kiosk powered by AI that delivers real-time, multilingual health assessments. By analysing demographics and medical history, it provides personalised and empathetic recommendations tailored to diverse communities [18]. Doctolib demonstrated how AI transforms unstructured healthcare data into actionable insights, while the Mayo Clinic showcased advancements in personalised medicine by integrating AI with genomic data [3].

Retail Applications

Generative AI is also reshaping the retail landscape. Lagardère Travel Retail, which operates over 5,000 stores across 50+ countries and generated €7.6 billion in sales in 2024, introduced "Lumina", a custom AI tool developed with Artefact and Microsoft. Lumina analyses landlord requests and accelerates the creation of tender books. CEO Dag Rasmussen explained:

"AI enables precise analysis of landlord requirements to streamline RFP responses" [17].

These examples illustrate how generative AI is driving progress across various sectors, from healthcare to retail, by addressing specific challenges and delivering measurable results.

Conclusion: What's Next for Generative AI

The discussions and breakthroughs showcased at RAISE Summit 2025 leave little doubt: generative AI is shifting from theoretical experimentation to practical implementation. With 6,500 attendees and over 280 speakers convening in Paris, the event highlighted how AI is evolving beyond basic chatbots into autonomous, multi-step agents capable of more complex tasks [4]. As the summit’s co-founders - Michael Amar, Henri Delahaye, and Hadrien de Cournon - remarked:

"For our second edition, RAISE Summit has again shown that it is the premier event for B2B professionals seeking to disrupt, build, and connect in the AI landscape, enabling visitors, speakers and exhibitors to validate their AI strategies through real‐world use cases" [4].

The road ahead for generative AI is focused on several priorities. Treating computational resources as strategic assets is becoming critical, alongside embedding ethical principles directly into AI systems. Hirundo.io's approach to "machine unlearning" is a standout example, reducing hallucinations by 55% and biases by 70% in testing [4]. Open-source collaboration also plays a growing role, with partnerships driving AI advancements at a fraction of the cost of proprietary solutions [4].

Looking forward, industry projections suggest a major shift in service interactions: by 2028, agentic AI could handle 68% of them, potentially cutting operational costs by 30% [20]. Eric Schmidt, for his part, described RAISE as:

"the fastest‑growing AI Tech conference in Europe, and maybe in history" [2].

The next RAISE Summit, set for 8–9 July 2026 in Paris, promises to further explore how these insights will translate into tangible advancements, reinforcing the event's role as a catalyst for transformative AI strategies [4].

FAQs

What defines a 'true agent' AI system in the enterprise?

A 'true agent' AI system stands out because it can make decisions on its own and take proactive, context-sensitive actions. Unlike reactive systems that only respond to specific triggers, these agents can adjust to changing environments, handle complex workflows, and complete tasks without needing constant human oversight.

What sets them apart? Their ability to reason, learn, and collaborate across different systems. This makes them highly effective in supporting enterprise processes. By seamlessly connecting knowledge to action, these AI systems have the potential to reshape how operations are managed.
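The plan-act-observe loop behind such agents can be sketched compactly. In a real system a language model would play the planner role; here a rule-based `toy_planner` stands in for it so the example runs without any model or API. The tool names, the order ID, and the loop structure are hypothetical illustrations, not a description of any vendor's agent framework.

```python
from typing import Callable, Dict, List, Optional, Tuple

Step = Tuple[str, str]          # (tool name, argument)
Record = Tuple[str, str, str]   # (tool name, argument, observation)
Planner = Callable[[str, List[Record]], Optional[Step]]


def run_agent(goal: str, plan: Planner, tools: Dict[str, Callable[[str], str]],
              max_steps: int = 5) -> List[Record]:
    """Repeatedly ask the planner for the next action, execute it, and record what happened."""
    history: List[Record] = []
    for _ in range(max_steps):  # hard cap so a confused planner cannot loop forever
        step = plan(goal, history)
        if step is None:        # planner judges the goal complete
            break
        tool_name, arg = step
        observation = tools[tool_name](arg)
        history.append((tool_name, arg, observation))
    return history


def toy_planner(goal: str, history: List[Record]) -> Optional[Step]:
    """Stand-in for an LLM planner: the next action depends on earlier observations."""
    used = {name for name, _, _ in history}
    if "lookup_order" not in used:
        return ("lookup_order", "A17")
    status = history[0][2]
    if status == "delayed" and "issue_credit" not in used:
        return ("issue_credit", "A17")
    return None


tools = {
    "lookup_order": lambda order_id: "delayed" if order_id == "A17" else "shipped",
    "issue_credit": lambda order_id: f"credit issued for {order_id}",
}

trace = run_agent("Resolve the complaint about order A17", toy_planner, tools)
for record in trace:
    print(record)
```

If `lookup_order` had returned "shipped", the planner would stop after one step; that dependence of the next action on the last observation is what separates an agent from a fixed script.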

How can companies reduce AI hallucinations without slowing deployment?

Companies can reduce AI hallucinations without slowing down deployment by adopting retrieval-augmented methods, which involve integrating external, verified data sources to support AI responses. Effective prompt engineering ensures that instructions given to the model are precise and reduce ambiguity. Additionally, implementing layered safety measures adds extra checkpoints to prevent errors.

To further boost reliability, continuous monitoring of AI outputs is essential. Techniques like tree search algorithms allow the system to explore multiple reasoning paths, while self-evaluation mechanisms enable models to review and refine their own outputs for better accuracy and grounding. Together, these strategies create a balance between speed and dependability.
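One of the layered checkpoints described above can be a grounding check that runs before an answer is shown. The sketch below uses simple word overlap as a crude, assumed stand-in for the model-based self-evaluation the text mentions: it flags answer sentences whose content words mostly do not appear in the retrieved sources, so they can be regenerated or routed to a reviewer. The stopword list and the 0.6 threshold are arbitrary assumptions.

```python
import re
from typing import List, Set

STOPWORDS = {"the", "a", "an", "is", "are", "of", "to", "in", "and", "for", "on", "within"}


def content_words(text: str) -> Set[str]:
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def unsupported_sentences(answer: str, sources: List[str], threshold: float = 0.6) -> List[str]:
    """Return answer sentences whose content words are poorly covered by the sources."""
    source_words: Set[str] = set().union(*(content_words(s) for s in sources))
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = content_words(sentence)
        if not words:
            continue
        coverage = len(words & source_words) / len(words)
        if coverage < threshold:
            flagged.append(sentence)
    return flagged


sources = ["Refunds are processed within 5 business days."]
answer = "Refunds are processed within 5 business days. Premium users get refunds instantly by drone."
flagged = unsupported_sentences(answer, sources)
print(flagged)
```

Lexical overlap misses paraphrases and can be fooled by reused words, which is why production checks usually rely on a second model or an entailment classifier; the control flow (score, threshold, flag) stays the same.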

What is “machine unlearning,” and when should it be used?

Machine unlearning is a method in artificial intelligence designed to remove specific data points from a trained model. The goal? To address privacy, legal, or ethical concerns. By erasing personal or sensitive information, it helps ensure compliance with regulations like the GDPR.

What makes this approach stand out is its efficiency. Instead of retraining the entire model from scratch - a process that can be time-consuming and resource-intensive - machine unlearning targets specific data. This is especially useful when retraining isn't feasible and when formal guarantees are required to confirm that the removed data no longer affects the model in any way.
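One established way to remove data without retraining the whole model, while still giving an exact guarantee, is sharded training, known in the research literature as SISA (Sharded, Isolated, Sliced, Aggregated): split the training data into disjoint shards, train one sub-model per shard, and aggregate their votes. Forgetting a record then means retraining only the shard that contained it, and the retrained sub-model has never seen that record. The sketch below is a simplified version (no slicing) under toy assumptions: a one-feature nearest-centroid sub-model and hypothetical data. It is a swapped-in illustration, not Hirundo.io's method, which the summit coverage does not describe.

```python
from statistics import mean
from typing import Dict, List, Tuple

Example = Tuple[float, str]  # (feature value, label)


def train_shard(data: List[Example]) -> Dict[str, float]:
    """Toy sub-model: the mean feature value (centroid) of each label in the shard."""
    labels = {y for _, y in data}
    return {y: mean(x for x, label in data if label == y) for y in labels}


class ShardedEnsemble:
    def __init__(self, data: List[Example], num_shards: int) -> None:
        # Disjoint shards: every training example lives in exactly one shard.
        self.shards = [data[i::num_shards] for i in range(num_shards)]
        self.models = [train_shard(s) for s in self.shards]
        self.retrain_count = 0

    def predict(self, x: float) -> str:
        votes: Dict[str, int] = {}
        for model in self.models:
            if not model:  # a shard emptied by unlearning contributes no vote
                continue
            nearest = min(model, key=lambda y: abs(model[y] - x))
            votes[nearest] = votes.get(nearest, 0) + 1
        return max(votes, key=votes.get)

    def forget(self, example: Example) -> None:
        """Remove one example and retrain only the shard that held it."""
        for i, shard in enumerate(self.shards):
            if example in shard:
                shard.remove(example)
                self.models[i] = train_shard(shard)
                self.retrain_count += 1
                return
        raise ValueError("example not found in any shard")


data = [(1.0, "low"), (1.2, "low"), (0.9, "low"), (5.0, "high"), (5.3, "high"), (4.8, "high")]
ensemble = ShardedEnsemble(data, num_shards=2)
untouched = ensemble.models[1]

ensemble.forget((5.3, "high"))  # lives in shard 0, so only shard 0 is retrained
print(ensemble.retrain_count, ensemble.predict(1.1))
```

The cost of a deletion scales with shard size rather than dataset size; the trade-off is that each sub-model sees less data, which can lower accuracy compared with one model trained on everything.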